I used to run my own mail server. But then came the spammers. And dictionary attacks. All sorts of other nasty things. I finally gave up and turned to Gmail to maintain my online identities. Recently, one of my web servers has been attacked by a bot from a Russian IP address, and attacks like that will eventually force me to deploy sophisticated bot detection. I'll probably have to turn to Google's reCAPTCHA service, which watches users to check that they're not robots.

Isn't this how governments and nations formed? You don't need a police force if there aren't any criminals. You don't need an army until there's a threat from somewhere else. But because of threats near and far, we turn to civil governments for protection. The same happens on the web. Web services may thrive and grow because of economies of scale, but just as often it's because only the powerful can stand up to storms. Facebook and Google become more powerful, even as civil government power seems to wane.

When a company or institution is successful by virtue of its power, it needs governance, lest that power go astray. History is filled with examples of power gone sour, so it's fun to draw parallels. Wikipedia, for example, seems to be governed like the Roman Catholic Church, with a hierarchical priesthood, canon law, and sacred texts. Twitter seems to be a failed state with a weak government, populated by rival factions demonstrating against each other. Apple is some sort of Buddhist monastery.

This year it became apparent to me that Facebook is becoming the internet version of a totalitarian state. It's become so ... needy. Especially the app. It's constantly inventing new ways to hoard my attention. It won't let me follow links to the internet. It wants to track me at all times. It asks me to send messages to my friends. It wants to remind me what I did 5 years ago and to celebrate how long I've been "friends" with friends. My social life is dominated by Facebook to the extent that I can't delete my account.

That's no different from the years before, I suppose, but what we saw this year is that Facebook's governance is unthinking. They've built a machine that optimizes everything for engagement, and it's been so successful that they don't know how to re-optimize it for humanity. They can't figure out how to avoid being a tool of oppression and propaganda. Their response to criticism is to fill everyone's feed with messages about how they're making things better. It's terrifying, but it could be so much worse.

I get the impression that Amazon is governed by an optimization for efficiency.

How is Google governed? There has never existed a more totalitarian entity, in terms of how much it knows about every aspect of our lives. Does it have a governing philosophy? What does it optimize for?

In a lot of countries, it seems that the civil governments are becoming a threat to our online lives. Will we turn to Wikipedia, Apple, or Google for protection? Or will we turn to civil governments to protect us from Twitter, Amazon and Facebook? Will democracy ever govern the Internet?

Happy 2019!

Monday, December 31, 2018

Thursday, December 27, 2018

Towards Impact-based OA Funding

Earlier this month, I was invited to a meeting sponsored by the Mellon Foundation about aggregating usage data for open-access (OA) ebooks, with a focus on scholarly monographs. The "problem" is that open licenses permit these ebooks to be liberated from hosting platforms and obtained in a variety of ways. A scholar might find the ebook via a search engine, on social media or on the publisher's web site; or perhaps in an index like the Directory of Open Access Books (DOAB), or in an aggregator service like JSTOR. The ebook file might be hosted by the publisher, by OAPEN, on Internet Archive, Dropbox, Github, or Unglue.it. Libraries might host files on institutional repositories, or scholars might distribute them by email or via ResearchGate or discipline-oriented sites such as Humanities Commons.

I haven't come to the "problem" yet. Open access publishers need ways to measure their impact. Since the whole point of removing toll-access barriers is to increase access to information, open access publishers look to their usage logs for validation of their efforts and mission. Unit sales and profits do not align very well with the goals of open-access publishing, but in the absence of sales revenue, download statistics and other measures of impact can be used to advocate for funding from institutions, from donors, and from libraries. Without evidence of impact, financial support for open access would be based more on faith than on data. (Not that there's anything inherently wrong with that.)

What is to be done? The "monograph usage" meeting was structured around a "provocation": that somehow a non-profit "Data Trust" would be formed to collect data from all the providers of open-access monographs, then channel it back to publishers and other stakeholders in privacy-preserving, value-affirming reports. There was broad support for this concept among the participants, but significant disagreements about the details of how a "Data Trust" might work, be governed, and be sustained.
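The post doesn't say how a Data Trust would merge usage data or keep its reports privacy-preserving. Here's a minimal sketch of one common approach, assuming every platform reports events keyed by a shared work identifier (a DOI or ISBN, say) and an institution. The event layout and the minimum count of 5 are hypothetical choices, not anything proposed at the meeting.

```python
from collections import Counter


def aggregate_usage(events, min_count=5):
    """Merge usage events reported by many hosting platforms into counts per
    (work, institution) pair, dropping any count below min_count so that small
    numbers can't be traced back to individual readers."""
    # The platform is ignored: once works share an identifier, a download
    # from OAPEN and one from JSTOR count toward the same total.
    counts = Counter((work, institution) for _platform, work, institution in events)
    return {key: n for key, n in counts.items() if n >= min_count}


if __name__ == "__main__":
    # Hypothetical events: (platform, work identifier, institution).
    events = (
        [("OAPEN", "work-1", "library-A")] * 6
        + [("JSTOR", "work-1", "library-A")] * 2
        + [("Internet Archive", "work-2", "library-B")] * 3
    )
    print(aggregate_usage(events))  # {('work-1', 'library-A'): 8}
```

Dropping small cells entirely is the bluntest option; a real report might instead show them as "fewer than 5" so totals still make sense.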

Why would anyone trust a "Data Trust"? Who, exactly, would be paying to sustain a "Data Trust"? What is the product that the "Data Trust" will be providing to the folks paying to sustain it? Would a standardized usage data protocol stifle innovation in ebook distribution? We had so many questions, and there were so few answers.

I had trouble sleeping after the first day of the meeting. At 4 AM, my long-dormant physics brain, forged in countless all-nighters of problem sets in college, took over. It proposed a gedanken experiment:

What if there was open-access monograph usage data that everyone really trusted? How might it be used? The answer is given away in the title of this post, but let's step back for a moment to provide some context.

For a long time, scholarly publishing was mostly funded by libraries that built great literature collections on behalf of their users - mostly scholars. This system incentivized the production of expensive must-have journals that expanded and multiplied so as to eat up all available funding from libraries. Monographs were economically squeezed in this process. Monographs, and the academic presses that published them, survived by becoming expensive, drastically reducing access for scholars.

With the advent of electronic publishing, it became feasible to flip the scholarly publishing model. Instead of charging libraries for access, access could be free for everyone, while authors paid a flat publication fee per article or monograph. In the journal world, the emergence of this system has erased access barriers. The publication fee system hasn't worked so well for monographs, however. The publication charge (much larger than an article charge) is often out of reach for many scholars, shutting them out of the open-access publishing process.

What if there was a funding channel for monographs that allocated support based on a measurement of impact, such as might be generated from data aggregated by a trusted "Data Trust"? (I'll call it the "OA Impact Trust", because I'd like to imagine that "impact" rather than a usage proxy such as "downloads" is what we care about.)

Here's how it might work:

- Libraries and institutions register with the OA Impact Trust, providing it with a way to identify usage and impact relevant to each library or institution.

- Aggregators and publishers deposit monograph metadata and usage/impact streams with the Trust.

- The Trust provides COUNTER reports (suitably adapted) for relevant OA monograph usage/impact to libraries and institutions. This allows them to compare OA and non-OA ebook usage side-by-side.

- Libraries and institutions allocate some funding to OA monographs.

- The Trust passes funding to monograph publishers and participating distributors.
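The funding step in that list can be sketched as a proportional split. This is a minimal sketch under assumptions the post doesn't make: one library's budget is divided among publishers in proportion to each title's measured usage at that library, and distributors' shares are left out. All names and numbers are hypothetical.

```python
import math
from collections import defaultdict


def allocate_funding(budget, usage, publisher_of, weight=lambda n: n):
    """Split one library's OA monograph budget among publishers in proportion
    to the weighted usage of each title at that library.

    budget:       amount the library has set aside for OA monographs
    usage:        title -> usage count from a (COUNTER-style) Trust report
    publisher_of: title -> publisher
    weight:       transform applied to each title's count; the default
                  means straight proportionality
    """
    weighted = {title: weight(n) for title, n in usage.items() if n > 0}
    total = sum(weighted.values())
    if total == 0:
        return {}  # nothing measured, nothing passed through
    shares = defaultdict(float)
    for title, w in weighted.items():
        shares[publisher_of[title]] += budget * w / total
    return dict(shares)


if __name__ == "__main__":
    usage = {"title-1": 300, "title-2": 100, "title-3": 100}
    publisher_of = {"title-1": "Press A", "title-2": "Press B", "title-3": "Press B"}
    print(allocate_funding(1000, usage, publisher_of))
    # {'Press A': 600.0, 'Press B': 400.0}
    print(allocate_funding(1000, usage, publisher_of, weight=math.sqrt))
```

The `weight` hook is one place the "viral work vs. intensely used niche work" judgement raised below could live: a square root, for example, shifts some money from the most-used title toward the others, while the total still adds up to the budget.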

The incentives built into such a system promote distribution and access. Publishers are encouraged to publish monographs that actually get used. Authors are encouraged to write in ways that promote reading and scholarship. Publishers are also encouraged to include their backlists in the system, and not just the dead ones, but the ones that scholars continue to use. Measured impact for OA publication rises, and libraries observe that more and more, their dollars are channeled to the material that their communities need.

Of course there are all sorts of problems with this gedanken OA funding scheme. If COUNTER statistics generate revenue, they will need to be secured against the inevitable gaming of the system and fraud. The system will have to make judgements about what sort of usage is valuable, and how to weigh the value of a work that goes viral against the value of a work used intensely by a very small community. Boundaries will need to be drawn. The machinery driving such a system will not be free, but it can be governed by the community of funders.
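Securing usage counts against gaming is a big problem, but one small, well-established piece already exists: the COUNTER Code of Practice filters "double-clicks", so that repeated requests for the same item by the same user within 30 seconds are counted once. Here's a rough sketch of that idea. The event format is hypothetical and the exact boundary handling is an assumption, so check the Code of Practice before relying on it; defeating bots and deliberate fraud would need much more.

```python
def filter_double_clicks(events, window=30.0):
    """Collapse rapid repeat requests before they're counted.

    events: iterable of (user_id, item_id, timestamp_in_seconds)
    A request is dropped when the same user requested the same item no more
    than `window` seconds earlier, so a burst of clicks counts once.
    """
    last_seen = {}
    kept = []
    for user, item, ts in sorted(events, key=lambda e: e[2]):
        previous = last_seen.get((user, item))
        last_seen[(user, item)] = ts
        if previous is not None and ts - previous <= window:
            continue  # part of a burst that has already been counted
        kept.append((user, item, ts))
    return kept


if __name__ == "__main__":
    log = [
        ("reader-1", "book-9", 0),
        ("reader-1", "book-9", 10),   # double click: dropped
        ("reader-1", "book-9", 100),  # a later, separate use: kept
        ("reader-2", "book-9", 5),    # different user: kept
    ]
    print(len(filter_double_clicks(log)))  # 3
```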

Do you think such a system can work? Do you think such a system would be fair, or at least fairer than other systems? Would it be Good, or would it be Evil?

Notes:

- Details have been swept under a rug the size of Afghanistan. But this rug won't fly anywhere unless there's willingness to pay for a rug.

- The white paper draft which was the "provocation" for the meeting is posted here.

- I've been thinking about this for a while.

Posted by Eric at 2:30 PM

Labels: Book Use, ebooks, Open Access, scholarly publishing

Tuesday, October 30, 2018

A Milestone for GITenberg

GITenberg is a prototype that explores how Project Gutenberg might work if all the Gutenberg texts were on Github, so that tools like version control, continuous integration, and pull-request workflow could be employed. We hope that Project Gutenberg can take advantage of what we've learned; work in that direction has begun but needs resources and volunteers. Go check it out!

It's hard to believe, but GITenberg started 6 years ago when Seth Woodworth started making Github repos for Gutenberg texts. I joined the project two years later when I started doing the same and discovered that Seth was 43,000 repos ahead of me. The project got a big boost when the Knight Foundation awarded us a Prototype Fund grant to "explore the applicability of open-source methodologies to the maintenance of the cultural heritage" that is the Project Gutenberg collection. But there were big chunks of effort left to finish the work when that grant ended. Last year, six computer-science seniors from Stevens Institute of Technology took up the challenge and brought the project within sight of a major milestone (if not the finishing-line). There remained only the reprocessing of 58,000 ebooks (with more being created every day!). As of last week, we've done that! Whew.

So here's what's been done:

- Almost 57,000 texts from Project Gutenberg have been loaded into Github repositories.

- EPUB, PDF, and Kindle Ebooks have been rebuilt and added to releases for all but about 100 of these.

- Github webhooks trigger dockerized ebook-building machines running on AWS Elastic Beanstalk every time a git repo is tagged.

- Toolchains for asciidoc, HTML and plain text source files are running on the ebook builders.

- A website at https://www.gitenberg.org/ uses the webhooks to index and link to all of the ebooks.

- www.gitenberg.org presents links to Github, Project Gutenberg, Librivox, and Standard Ebooks.

- Cover images are supplied for every ebook.

- Human-readable metadata files are available for every ebook.

- Syndication feeds for these books are made available in ONIX, MARC and OPDS via Unglue.it.
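The webhook-driven build step in that list can be sketched like this. It's a hypothetical handler, not GITenberg's actual code: it assumes the builder listens for GitHub's `create` event (whose payload carries `ref`, `ref_type` and `repository.full_name`) and queues a build only when the new ref is a tag. The in-process queue and the repo name in the usage example are placeholders.

```python
import hashlib
import hmac
import json
import queue

# Placeholder queue; a real deployment would use a shared queue that the
# ebook-building workers poll.
build_queue = queue.Queue()


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check GitHub's X-Hub-Signature-256 header (HMAC-SHA256 of the raw body)."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected.encode(), (signature_header or "").encode())


def handle_webhook(event_type: str, body: bytes) -> bool:
    """Queue an ebook build when a tag is created in a book's repo.

    event_type is the X-GitHub-Event header; body is the raw JSON payload.
    Returns True if a build job was queued.
    """
    if event_type != "create":
        return False
    payload = json.loads(body)
    if payload.get("ref_type") != "tag":
        return False  # a new branch, not a release tag
    build_queue.put({
        "repo": payload["repository"]["full_name"],
        "tag": payload["ref"],
    })
    return True


if __name__ == "__main__":
    body = json.dumps({
        "ref": "0.1.0",
        "ref_type": "tag",
        "repository": {"full_name": "GITenberg/Example-Book_1"},
    }).encode()
    print(handle_webhook("create", body), build_queue.get_nowait())
```

Keying builds on tags rather than on every push means a book is rebuilt only when someone deliberately marks a release, which also keeps the build rate within Github's API limits.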

Everything in this project is built in the hope that the bits can be incorporated into Project Gutenberg wherever appropriate. In January 2019, new books will once again begin entering the US public domain, so it's more important than ever that we strengthen the infrastructure that supports it.

Some details:

- All of the software that's been used is open source and content is openly licensed.

- PG's epubmaker software has been significantly strengthened and improved.

- About 200 PG ebooks have had fatal formatting errors remediated to allow for automated ebook file production.

- 1,363 PG ebooks were omitted from this work due to licensing or because they aren't really books.

- PG's RDF metadata files were converted to human-readable YAML and enhanced with data from New York Public Library and from Wikipedia.

- Github API throttling limits the build/release rate to about 600 ebooks/hour/login. A full build takes about 4 days with one Github login.

Thanks to:

- Seth Woodworth. In retrospect, the core idea was obvious, audacious, and crazy. Like all great ideas.

- Github tech support. Always responsive.

- The O'Reilly HTMLBook team. The asciidoc toolchain is based on their work.

- Plympton. Many asciidoc versions were contributed to GITenberg as part of the "Recovering the Classics" project. Thanks to Jenny 8. Lee, Michelle Cheng, Max Pevner and Nessie Fox.

- Albert Carter and Paul Moss contributed to early versions of the GITenberg website.

- The Knight Foundation provided funding for GITenberg at a key juncture in the project's development through its Prototype Fund. The Knight Foundation supports public-benefitting innovation in so many ways even beyond the funding it provides, and we thank them with all our hearts.

- Travis-CI. The first version of automated ebook building took advantage of Travis-CI. Thanks!

- Raymond Yee got the automated ebook building to actually work.

- New York Public Library contributed descriptions, rights info, and generative covers. They also sponsored hackathons that significantly advanced the environment for public domain books. Special thanks to Leonard Richardson, Mauricio Giraldo and Jens Troeger (Bookalope).

- My Board at the Free Ebook Foundation: Seth, Vicky Reich, Rupert Gatti, Todd Carpenter, Michael Wolfe and Karen Liu. Yes, we're overdue for a board meeting...

- The Stevens GITenberg team: Marc Gotliboym, Nicholas Tang-Mifsud, Brian Silverman, Brandon Rothweiler, Meng Qiu, and Ankur Ramesh. They redesigned the gitenberg.org website, added search, added automatic metadata updates, and built the dockerized elastic beanstalk ebook-builder and queuing system. This work was done as part of their two-semester capstone (project) course. The course is taught by Prof. David Klappholz, who managed a total of 23 student projects last academic year. Students in the course design and develop software for established companies, early stage startups, nonprofits, gov't agencies, etc., etc. Take a look at detailed information about software that has been developed over the past 6-7 years and details of how the course works.

- Last, but certainly not least, Greg Newby (Project Gutenberg) for consistent encouragement and tolerance of our nit-discovery, Juliet Sutherland (Distributed Proofreaders) for her invaluable insights into how PG ebooks get made, and the countless volunteers at both organizations who collectively have made possible the preservation and reuse of our public domain.

So what's next? As I mentioned, we've taken some baby steps towards applying version control to Project Gutenberg. But Project Gutenberg is a complex organism, and implementing profound changes will require broad consensus-building and resource gathering (both money and talent). Project Gutenberg and the Free Ebook Foundation are very lean non-profit organizations dependent on volunteers and small donations. What's next is really up to you!

Posted by Eric at 11:45 AM

Labels: Gitenberg, GitHub, Open Source, Project Gutenberg

Tuesday, September 18, 2018

eBook DRM and Blockchain play CryptoKitty and Mouse. And the Winner is...

If you want to know how blockchain relates to DRM and ebooks, it helps to understand CryptoKitties.

CryptoKitties are essentially numbers that live in a game-like environment which renders cats based on the numbers. Players can buy, collect, trade, and breed their kitties. Each kitty is unique. Players let their kitties play games in the "kittyverse". Transactions involving CryptoKitties take place on the Ethereum blockchain. Use of the blockchain makes CryptoKitties different from other types of virtual property. The kitties can be traded outside of the game environment, and the kitties can't be confiscated or deleted by the game developers. In fact, the kitties could easily live in third-party software environments, though they might not carry their in-game attributes with them. Over 12 million dollars has been spent on CryptoKitties, and while you might assume they're a passing fad, they haven't gone away.

It's weird to think about "digital rights management" (DRM) for CryptoKitties. Cryptography locks a kitty to a user's cryptocurrency wallet, but you can transfer a wallet to someone else by giving them your secret keys. With the key, you can do anything with the contents of the wallet. The utility of your CryptoKitty (your "digital rights") is managed by a virtual environment controlled by Launch Labs, Inc., but until the kitties become sentient (15-20 years?) the setup doesn't trigger my distaste for DRM.

Now, think about how Amazon's Kindle works. When you buy an ebook from Amazon, what you're paying for is a piece of virtual property that only exists in the Kindle virtual world. The Kindle software environment endows your virtual property with value - but instead of giving you the right to breed a kitty, you might get the right to read about a kitty. You're not allowed to exercise this right outside of Amazon's virtual world, and DRM exists to enforce Amazon's control of that right. You can't trade or transfer this right.

Ebooks are different from virtual property in important ways. Ebooks are words, ideas, stories that live just fine outside Kindle. DRM takes this outside life away, which is a sin. And it robs readers of the ability to read without Big Brother keeping track of every page they read. Most authors and publishers see DRM as a necessary evil, because they don't believe in a utopia where readers pay creators just because they're worth it.

But what if it were possible to "CryptoKittify" ebooks? Would that mitigate the sins of DRM, or even render it unnecessary? Would it just add the evils of blockchain to the evils of DRM? Two startups, Publica and Scenarex, are trying to find out.

Depending on implementation, the "CryptoKittification" of ebooks could allow enhanced privacy and property rights for purchasers as well as transaction monitoring for rights holders. If a user's right to an ebook was registered on a blockchain, a reader application wouldn't need to "phone home" to check whether a user was entitled to open and use the ebook. Similarly, the encrypted ebook files could be stored on a distributed service such as IPFS, or on a publisher's distribution site. The reader platform provider needn't separately verify the user. And just like printed books, a reader license could be transferred or sold to another user.
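To make the "no phoning home" idea concrete, here's a toy sketch of a hash-chained license ledger that a reader app could check against a local copy. It is not how Scenarex or Publica work; every name is hypothetical, and it deliberately leaves out what a real blockchain adds (signatures authorizing each transfer, consensus among many copies, and the keys that decrypt the ebook file).

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64


@dataclass
class LicenseLedger:
    """Toy append-only ledger of ebook license transfers. Each record carries
    the hash of the record before it, so editing any past record breaks the chain."""
    records: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def transfer(self, book_id: str, new_owner: str) -> None:
        prev = self.records[-1]["hash"] if self.records else GENESIS
        body = {"book": book_id, "owner": new_owner, "prev": prev}
        self.records.append({**body, "hash": self._digest(body)})

    def verify(self) -> bool:
        prev = GENESIS
        for rec in self.records:
            body = {"book": rec["book"], "owner": rec["owner"], "prev": rec["prev"]}
            if rec["prev"] != prev or rec["hash"] != self._digest(body):
                return False
            prev = rec["hash"]
        return True

    def owner_of(self, book_id: str):
        owner = None
        for rec in self.records:
            if rec["book"] == book_id:
                owner = rec["owner"]  # later transfers override earlier ones
        return owner


def may_open(ledger: LicenseLedger, book_id: str, reader: str) -> bool:
    """What a reader app could check against its local copy of the ledger,
    with no call back to the bookstore."""
    return ledger.verify() and ledger.owner_of(book_id) == reader


if __name__ == "__main__":
    ledger = LicenseLedger()
    ledger.transfer("book-1", "alice")  # purchase
    ledger.transfer("book-1", "bob")    # alice resells to bob
    print(may_open(ledger, "book-1", "bob"))    # True
    print(may_open(ledger, "book-1", "alice"))  # False
```

The resale in the example is the "just like printed books" part: ownership moves by appending a record, and no store has to approve or even see it.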

Alas, the DRM devil is always in the details, which is why I quizzed both Scenarex and Publica about their implementations. The two companies have taken strikingly different approaches to the application of blockchain to the ebook problem.

Scenarex, a company based in Montreal, has strived to make their platform familiar to both publishers and readers. You don't need to have cryptocurrency or a crypto-wallet to use their system, called "Bookchain". Their website will look like an online bookstore, and their web-based reader application will use ebooks in the EPUB format rendered by the open-source Readium software being used by other ebook websites. All of the interaction with the blockchain will be handled in their servers. The affordances of their user-facing platform, at least in its initial form, should be very similar to other Readium-powered sites. For users, the only differences will be the license transfer options enabled by the blockchain and its content providers. Because the licenses will be memorialized on a blockchain, it's possible that they could be used in other reading environments.

Scenarex's conservative approach of hiding most of the blockchain from users and rights holders means that almost all of Scenarex's blockchain potential is as yet unrealized. There's no significant difference in privacy compared to Readium's LCP DRM scheme. License portability and transactions will depend on whether other providers decide to adopt Scenarex's license tokenization and publication scheme. Because blockchain interaction takes place behind Scenarex's servers, the problems with blockchain immutability are mitigated, but so are the corresponding benefits to the purchaser. Scenarex expects to launch soon, but it's still too early to see if they can gain any traction.

Publica, by contrast, has chosen to propose a truly radical course for the ebook industry. Publica, with development offices in Latvia, doesn't make sense if you think of it as an ebook store; it only makes sense if you think of it as a crowd-funding platform for ebooks. (Disclosure: Unglue.it, a website I founded and run as part of the Free Ebook Foundation, started life as a crowd-funding platform for free ebooks.)

Publica invites authors to create "initial coin offerings" (ICOs) for their books. An author raising funds for their book sells read tokens for the book to investors, presumably in advance of publication. When the book is published, token owners get to read the book. Tokens can be traded or sold in Ethereum blockchain-backed transactions.

From an economic point of view, this doesn't seem to make much sense. If the token marketplace is efficient, the price of a token will fluctuate until the supply of tokens equals the number of people who want continuing access to the book. Sell too many tokens, and the price crashes to near zero. In today's market for books, buyers are motivated by word of mouth, so newly published books, especially by unknown authors, are given out free to reviewers and other influencers. To make money with an ICO, in contrast, an author will need to limit the supply so as to support the token's attractiveness to investors, and thus the book's price.
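A toy model makes the supply argument concrete. It assumes a single market price, one token per reader, and no speculative demand (which is exactly what ICO investors would bring), so treat it as an illustration only; the valuations are invented.

```python
def clearing_price(valuations, supply):
    """Highest price at which all `supply` read tokens find willing holders.

    valuations: the most each potential reader would pay to hold a token.
    In this stylized uniform-price model, the token sells for whatever the
    marginal (supply-th most eager) holder will pay; once there are more
    tokens than interested readers, the marginal token is worth nothing.
    """
    if supply <= 0:
        raise ValueError("supply must be positive")
    interested = sorted((v for v in valuations if v > 0), reverse=True)
    if supply > len(interested):
        return 0.0
    return float(interested[supply - 1])


if __name__ == "__main__":
    # Hypothetical reservation prices, in dollars, for six interested readers.
    valuations = [20, 15, 10, 8, 5, 3]
    for supply in (3, 6, 10):
        price = clearing_price(valuations, supply)
        print(supply, price, supply * price)
    # 3 10.0 30.0
    # 6 3.0 18.0
    # 10 0.0 0.0
```

Selling 3 tokens raises more than selling 6, and selling 10 raises nothing: that's the pressure to restrict supply, which runs against the give-copies-to-reviewers way that books actually find readers.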

In many ways, however, book purchasers don't act like economists. They keep their books around forever. They accumulate TBR piles. Yes, they'll give away or sell books, but that is typically to enable further accumulation. They'll borrow a book from the library, read it, and THEN buy it. Book purchasers collect books. Which brings us back to CryptoKitties.

In May of 2018, a CryptoKitty sold at auction for over $140,000. That's right, someone paid 6 figures for what is essentially a number! Can you imagine someone paying that much for a copy of a book?

I can imagine that. In 2001, a First Folio edition of Shakespeare's plays sold for over $6,000,000! Suppose that J. K. Rowling had sold 100 digital first editions of Harry Potter and the Philosopher's Stone in 1996 to make ends meet. How much do you think someone would pay for one of those today, assuming the provenance and "ownership" could be unassailably verified?

CryptoKitties might be cute and they might have rare characteristics, but many more people develop powerful emotional attachments to books, even if they're just words or files full of bytes. A First Folio is an important historical artifact because of the huge cultural impact of the words it memorializes. I think it's plausible that a digital artifact could be similarly important, especially if its original sale provided support to its artist.

This brings me back to DRM. I asked the CTO of Publica, Yuri Pimenov, about it, and he seemed apologetic.

"Even Amazon's DRM can be easily removed (I did it once). So, let's assume that DRM is a little inconvenience that [...] people are ready to pay [to get around]. And besides the majority of people are good and understand that authors make a living by writing books..."

Publica's app uses a cryptographic token on the blockchain to allow access to the book contents, and does DRM-ish things like disabling quoting. But since the token is bound to a cryptographic wallet, not to a device or an account, it just papers over author concerns such as piracy. Pimenov is correct to note that it's the reader's relationship to the author that the Publica marketplace should cement. Once Publica understands that memorializing readers' support for authors is where their success can come from, I think they'll realize that DRM, by restricting readers and building moats around literature, is counterproductive. To make an ebook into a collectable product, we don't need DRM; we need "DRMem": Digital Rights Memorialization.
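The post leaves "DRMem" as an idea rather than a design. Here's one hypothetical reading of it, as a sketch: the ebook file stays DRM-free, and what gets memorialized is only the fingerprint of the edition and the ownership history of each numbered copy. In practice the record would have to live somewhere tamper-evident, such as the blockchains discussed above; this in-memory class and all of its names are invented for illustration.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """SHA-256 of the ebook file: identifies an edition without restricting it."""
    return hashlib.sha256(data).hexdigest()


class EditionRegistry:
    """Hypothetical memorialization record for a numbered digital first edition.
    Nothing here stops anyone from reading the file; it only records who
    bought, and later owned, each numbered copy."""

    def __init__(self, edition_file: bytes, copies: int):
        self.edition_fingerprint = fingerprint(edition_file)
        self.copies = copies
        self.owners = {}  # copy number -> owners, oldest first

    def record_sale(self, copy_no: int, buyer: str) -> None:
        if not 1 <= copy_no <= self.copies:
            raise ValueError(f"there is no copy #{copy_no}")
        self.owners.setdefault(copy_no, []).append(buyer)

    def provenance(self, copy_no: int) -> list:
        return list(self.owners.get(copy_no, []))

    def is_this_edition(self, data: bytes) -> bool:
        return fingerprint(data) == self.edition_fingerprint


if __name__ == "__main__":
    epub = b"...the bytes of the first-edition EPUB..."  # placeholder content
    registry = EditionRegistry(epub, copies=100)
    registry.record_sale(7, "first reader")  # the original, author-supporting sale
    registry.record_sale(7, "collector")     # a later resale
    print(registry.provenance(7))             # ['first reader', 'collector']
    print(registry.is_this_edition(epub))     # True
```

Anyone can copy and read the file; what can't be copied is the recorded history showing that copy #7 was bought from the author in the first place.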

So, I'm surprised to be saying this, but... CryptoKitties win!

More Links:

- Can Blockchain Disrupt The E-Book Market? Two Startups Will Find Out - Bill Rosenblatt

- Blockchain Comes to E-Books, DRM Included - Bill Rosenblatt

- London Book Fair 2018: Meet the World’s First #1 Bestselling ‘Blockchain’ Author - Andrew Albanese

- The Content Blockchain Project

- BitRights - Blockchain Digital Content

- Civil - The Decentralized Marketplace for Sustainable Journalism

Thursday, August 2, 2018

My Face is Personally Identifiable Information

Facial recognition technology used to be so adorable. When I wrote about it 7 years ago, the facial recognition technology in iPhoto was finding faces in shrubbery, but was also good enough to accurately see family resemblances in faces carved into a wall. Now, Apple thinks it's good enough to use for biometric logins, bragging that "your face is your password".

I think this will be my new password:

The ACLU is worried about the civil liberty implications of facial recognition and the machine learning technology that underlies it. I'm worried too, but for completely different reasons. The ACLU has been generating a lot of press as they articulate their worries - that facial recognition is unreliable, that it's tainted by the bias inherent in its training data, and that it will be used by governments as a tool of oppression. But I think those worries are short-sighted. I'm worried that facial recognition will be extremely accurate, that its training data will be complete and thus unbiased, and that everyone will be using it everywhere on everyone else and even an oppressive government will be powerless to preserve our meager shreds of privacy.

We certainly need to be aware of the ways in which our biases can infect the tools we build, but the ACLU's argument against facial recognition invites the conclusion that things will be just peachy if only facial recognition were accurate and unbiased. Unfortunately, it will be. You don't have to read Cory Doctorow's novels to imagine a dystopia built on facial recognition. The progression of technology is such that multiple face recognizer networks could soon be observing us wherever we go in the physical world - the same way that we're recognized at every site on the internet via web beacons, web profilers and other spyware.

The problem with having your face as your password is that you can't keep your face secret. Faces aren't meant to be secret. Our faces co-evolved with our brains to be individually recognizable; evidently, having an identity confers a survival advantage. Our societies are deeply structured around our ability to recognize other people by their faces. We even put faces on our money!

Facial recognition is not new at all, but we need to understand the ways in which machines doing the recognizing will change the fabric of our societies. Let's assume that the machines will be really good at it. What's different?

For many applications, the machine will be doing things that people already do. Putting a face-recognizing camera on your front door is just doing what you'd do yourself in deciding whether to open it. Maybe using facial recognition in place of a paper driver's license or passport would improve upon the performance of a TSA agent squinting at that awful 5-year-old photo of you. What's really transformative is the connectivity. That front-door camera will talk to FedEx's registry of delivery people. When you use your face at your polling place, the bureau of elections will make sure you don't vote anywhere else that day. And the ID check that proves you're old enough to buy cigarettes will update your medical records. What used to identify you locally can now identify you globally.

The reason that face-identity is so scary is that it's a type of identifier that has never existed before. It's globally unique, but it doesn't require a central registry to be used. It's public, easily collected and you can't remove it. It's as if we all had to tattoo our

We can't stop facial recognition technology any more than we can reverse global warming, but we can start preparing today. We need to start by treating facial profiles and photographs as personally identifiable information. We have some privacy laws that cover so-called "PII", and we need to start applying them to photographs and facial recognition profiles. We can also impose strict liability for the misuse of biased or inaccurate facial recognition; slowing down the adoption of facial recognition technology will give our society a chance to adjust to its consequences.

Oh, and maybe Denmark's new law against niqabs violates GDPR?

Posted by Eric at 11:31 AM

0 comments


Labels: Amazon Web Services, identifiers, privacy, public identity, technology

Friday, June 8, 2018

The Vast Potential for Blockchain in Libraries

There is absolutely no use for "blockchain technology" in libraries. NONE. Zip. Nada. Fuhgettaboutit. Folks who say otherwise are either dishonest, misinformed, or misleadingly defining "blockchain technology" as all the wonderful uses of digital signatures, cryptographic hashes, peer-to-peer networks, zero-knowledge proofs, hash chains and Merkle trees. I'm willing to forgive members of this third category of crypto-huckster because libraries really do need to learn about all those technologies and put them to good use. Call it NotChain, and I'm all for it.

It's not that blockchain for libraries couldn't work, it's that blockchain for libraries would be evil. Let me explain.

All the good attributes ascribed to magical "blockchain technology" are available in "git", a program used by software developers for distributed version control. The folks at GitHub realized that many problems would benefit from some workflow tools layered on top of git, and they're now being acquired for several billion dollars by Microsoft, which is run by folks who know a lot about that digital crypto stuff.

A Merkle tree. (from Wikipedia)

Believe it or not, blockchains and git repos are both based on Merkle trees, which use cryptographic hashes to indelibly tie one information packet (a block or a commit) to a preceding information packet. The packets are thus arranged in a tree. The difference between the two is how they achieve consensus (how they prune the tree).
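A minimal Python sketch of the hash-linking idea may help. It is a simplification: the packet format (a JSON object holding a payload plus its parent's SHA-256 hash) is an assumption for illustration, not git's actual object format or any blockchain's block layout. Editing an early packet invalidates every link after it, which is what makes the history tamper-evident.

```python
import hashlib
import json
from typing import Dict, List, Optional


def packet_hash(payload: str, parent: Optional[str]) -> str:
    """Hash a packet's payload together with its parent's hash (illustrative format)."""
    data = json.dumps({"payload": payload, "parent": parent}, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def build_chain(payloads: List[str]) -> List[Dict[str, Optional[str]]]:
    """Link each packet to the previous one by embedding the previous packet's hash."""
    chain: List[Dict[str, Optional[str]]] = []
    parent: Optional[str] = None
    for payload in payloads:
        digest = packet_hash(payload, parent)
        chain.append({"payload": payload, "parent": parent, "hash": digest})
        parent = digest
    return chain


def verify(chain: List[Dict[str, Optional[str]]]) -> bool:
    """Recompute every hash; an edit to any earlier packet breaks the links after it."""
    parent: Optional[str] = None
    for packet in chain:
        if packet["parent"] != parent:
            return False
        if packet_hash(packet["payload"], parent) != packet["hash"]:
            return False
        parent = packet["hash"]
    return True


chain = build_chain(["catalog record v1", "add subject heading", "fix typo in title"])
print(verify(chain))  # True
chain[0]["payload"] = "catalog record v1 (quietly edited)"
print(verify(chain))  # False
```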

Blockchains strive to grow a single branch (thus, the tree becomes a chain). They reach consensus by adding packets according to the computing power of nodes that want to add a packet (proof of work) or to the wealth of nodes that want to add a packet (proof of stake). So if you have a problem where you want a single trunk (a ledger) whose control is allocated by wealth or power, blockchain may be an applicable solution.
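To make "adding packets according to computing power" concrete, here is a toy proof-of-work sketch in Python. The difficulty rule (a SHA-256 digest starting with a given number of zero hex digits) and the `prev|payload|nonce` string layout are simplified assumptions, not Bitcoin's actual header format or target encoding. Finding a valid nonce takes many hash attempts, while checking one takes a single hash, so whoever can afford the most hashing tends to extend the chain.

```python
import hashlib
from typing import Tuple


def block_digest(prev_hash: str, payload: str, nonce: int) -> str:
    """Hash a candidate block (illustrative layout: prev|payload|nonce)."""
    return hashlib.sha256(f"{prev_hash}|{payload}|{nonce}".encode("utf-8")).hexdigest()


def mine(prev_hash: str, payload: str, difficulty: int) -> Tuple[int, str]:
    """Brute-force a nonce whose digest starts with `difficulty` zero hex digits."""
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = block_digest(prev_hash, payload, nonce)
        if digest.startswith(target):
            return nonce, digest
        nonce += 1


def is_valid(prev_hash: str, payload: str, nonce: int, difficulty: int) -> bool:
    """Verification costs one hash, no matter how long mining took."""
    return block_digest(prev_hash, payload, nonce).startswith("0" * difficulty)


genesis = "0" * 64
nonce, digest = mine(genesis, "ledger entry: transfer 5 tokens", difficulty=3)
print(nonce, digest[:12])
print(is_valid(genesis, "ledger entry: transfer 5 tokens", nonce, difficulty=3))  # True
```

Each extra zero digit multiplies the expected number of attempts by 16, which is how "control allocated by computing power" shows up in practice.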

Git repos take a different approach to consensus; git makes it easy to make a new branch (or fork) and it makes it easy to merge branches back together. It leaves the decision of whether to branch or merge mostly up to humans. So if you have a problem where you need to reach consensus (or disagreement) about information by the usual (imperfect) ways of humans, git repos are possibly the Merkle trees you need.
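The branch-and-merge structure can be sketched the same way. In this simplified Python model (an assumption, not git's real object format), a commit's id hashes its message together with all of its parents' ids. Two people can fork from the same commit, and a merge is simply a commit with two parents (git records merges the same way, as commits with more than one parent); whether and when to create it is left to the humans.

```python
import hashlib
from typing import Dict, List, Set, Tuple

# commit id -> (message, parent ids)
commits: Dict[str, Tuple[str, Tuple[str, ...]]] = {}


def commit(message: str, *parents: str) -> str:
    """Record a commit whose id depends on its message and every parent id."""
    data = "\n".join([message, *parents])
    cid = hashlib.sha256(data.encode("utf-8")).hexdigest()[:12]
    commits[cid] = (message, parents)
    return cid


def ancestors(cid: str) -> Set[str]:
    """Walk parent links through the graph (a DAG, not a single chain)."""
    seen: Set[str] = set()
    stack: List[str] = list(commits[cid][1])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(commits[current][1])
    return seen


root = commit("import MARC records")
alice = commit("Alice: normalize author names", root)  # one branch
bob = commit("Bob: add subject headings", root)        # a fork from the same parent
merged = commit("merge after discussion", alice, bob)  # a human decided to merge

print(len(commits[merged][1]))                  # 2
print(ancestors(merged) == {root, alice, bob})  # True
```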

I think library technology should not be enabling consensus on the basis of wealth or power rather than thought and discussion. That would be evil.

Notes:

1. Here are some good articles about git and blockchain:

- Blockchain: Under the Hood - Justin Ramos

- Is a Git Repository a Blockchain? - Danno Ferrin

- Is Git a Blockchain? - Dave Mercer

- The Blockchain, From a Git Perspective - Jackson Kelley

2. Why is "blockchain" getting all the hype, instead of "Merkle trees" or "git"? I can think of three reasons:

- "git" is a funny name.

- "Merkle" is a funny name.

- Everyone loves Lego blocks!

3. I wrote an article about what the library/archives/publishing world can learn from bitcoin. It's still good.

Posted by Eric at 12:12 PM

0 comments


Labels: Bitcoin, blockchain, Cryptography, library automation, magic

Friday, May 18, 2018

The Shocking Truth About RA21: It's Made of People!

Useful Utilities logo from 2004

He was wrong. IP address authentication and EZProxy, now owned and managed by OCLC, are still the access management mainstays for libraries in the age of the internet. IP authentication allows for seamless access to licensed resources on a campus, while EZProxy allows off-campus users to log in just once to get similar access. Meanwhile, Shibboleth, OpenAthens and similar solutions remain feature-rich systems with clunky UIs and little mainstream adoption outside big rich publishers, big rich universities and the UK, even as more distributed identity technologies such as OAuth and OpenID have become ubiquitous thanks to Google, Facebook, Twitter etc.

from My Book House, Vol. I: In the Nursery, p. 197.

- IP authentication imposes significant administrative burdens on both libraries and publishers. On the library side, EZProxy servers need a configuration file that knows about every publisher supplying the library. It contains details about the publisher's website that the publisher itself is often unaware of! On the publisher side, every customer's IP address range must be accounted for and updated whenever changes occur. Fortunately, this administrative burden scales with the size of the publisher and the library, so small publishers and small institutions can (and do) implement IP authentication with minimal cost. (For example, I wrote a Django module that does it.)

- IP addresses are losing their grounding in physical locations. As IP address space fills up, access at institutions increasingly uses dynamic IP addresses in local, non-public networks. Cloud access points and VPN tunnels are now common. This has caused publishers to blame IP address authentication for unauthorized use of licensed resources, such as that by Sci-Hub. IP address authentication will most likely get leakier and leakier.
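On the publisher side, IP authentication boils down to checking each request's address against every customer's registered ranges. The sketch below uses Python's standard `ipaddress` module; it is not the Django module mentioned above, and the customer names and ranges are hypothetical (taken from the documentation-only blocks 192.0.2.0/24, 198.51.100.0/24 and 2001:db8::/32). The administrative burden lives in keeping `CUSTOMER_RANGES` current as institutions renumber, add VPNs, or move to the cloud.

```python
import ipaddress
from typing import Dict, List, Optional

# Hypothetical customers and licensed ranges (documentation-only address blocks).
CUSTOMER_RANGES: Dict[str, List[str]] = {
    "Example University Library": ["192.0.2.0/24", "2001:db8:1::/48"],
    "Example Public Library": ["198.51.100.16/28"],
}

# Parse once at startup; ip_network() raises ValueError on a malformed range.
NETWORKS = {
    name: [ipaddress.ip_network(r) for r in ranges]
    for name, ranges in CUSTOMER_RANGES.items()
}


def licensed_customer(client_ip: str) -> Optional[str]:
    """Return the customer whose licensed ranges contain client_ip, or None."""
    addr = ipaddress.ip_address(client_ip)
    for name, networks in NETWORKS.items():
        # An IPv4 address is never "in" an IPv6 network; the test just returns False.
        if any(addr in net for net in networks):
            return name
    return None


print(licensed_customer("192.0.2.45"))     # Example University Library
print(licensed_customer("198.51.100.40"))  # None
```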

Monsters in the middle are dangerous, and the web is becoming less tolerant of them. EZProxy acts as a "Monitor in the Middle", intercepting web traffic and inserting content (rewritten links) into the stream. This is what spies and hackers do, and unfortunately the threat environment has become increasingly hostile. In response, publishers that care about user privacy and security have implemented website encryption (HTTPS) so that users can be sure that the content they see is the content they were sent.
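To see why a rewriting proxy looks like tampering to an HTTPS-conscious web, consider a simplified sketch of hostname-style link rewriting, roughly the approach proxies such as EZProxy use to keep users on the proxy. This is not EZProxy's code: the proxy domain, the configured publisher hosts, and the dots-to-hyphens naming rule are illustrative assumptions, and the regex ignores the many cases (ports, links built by JavaScript, service worker fetches) a real proxy has to cope with.

```python
import re
from urllib.parse import urlsplit, urlunsplit

PROXY_DOMAIN = "ezproxy.library.example"                            # hypothetical proxy host
PROXIED_HOSTS = {"www.publisher.example", "cdn.publisher.example"}  # hypothetical config entries

# Matches href="..." and src="..." attributes in served HTML.
ATTR = re.compile(r'\b(href|src)="([^"]*)"')


def proxify(url: str) -> str:
    """Map a configured publisher URL onto a hostname under the proxy's domain."""
    parts = urlsplit(url)
    host = parts.hostname
    if host not in PROXIED_HOSTS:
        return url  # unconfigured hosts and relative links pass through untouched
    proxied_host = host.replace(".", "-") + "." + PROXY_DOMAIN
    return urlunsplit((parts.scheme, proxied_host, parts.path, parts.query, parts.fragment))


def rewrite_page(html: str) -> str:
    """Rewrite links in a page as it passes through the proxy."""
    return ATTR.sub(lambda m: f'{m.group(1)}="{proxify(m.group(2))}"', html)


page = '<a href="https://www.publisher.example/article/42">PDF</a> <img src="/logo.png">'
print(rewrite_page(page))
# <a href="https://www-publisher-example.ezproxy.library.example/article/42">PDF</a> <img src="/logo.png">
```

Every page has to be decrypted, edited, and re-served by the proxy, which is exactly the kind of modification HTTPS is designed to make detectable.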

In this environment, EZProxy represents an increasingly attractive target for hackers. A compromised EZProxy server could be a potent attack vector into the systems of every user of a library's resources. We've been lucky that (as far as is known) EZProxy is not widely used as a platform for system compromise, probably because other targets are softer.

Looking into the future, it's important to note that new web browser APIs, such as service workers, require secure channels. As publishers begin to make use of these APIs, it's likely that EZProxy's rewriting will irreparably break new features.

So RA21 is an effort to replace IP authentication with something better. Unfortunately, the discussions around RA21 have been muddled because it's being approached as if RA21 is a product design, complete with use cases, technology pilots, and abstract specifications. But really, RA21 isn't a technology, or a product. It's a relationship that's being negotiated.

What does it mean that RA21 is a relationship? At its core, the authentication function is an expression of trust between publishers, libraries and users. Publishers need to trust libraries to "authenticate" the users for whom the content is licensed. Libraries need to trust users not to use the content in violation of its licenses. So, for example, users are trusted to keep their passwords secret. Publishers also have obligations in the relationship, but the trust expressed by IP authentication flows entirely in one direction.

I believe that IP Authentication and EZProxy have hung around so long because they have accurately represented the bilateral, asymmetric relationships of trust between users, libraries, and publishers. Shibboleth and its kin imperfectly insert faceless "Federations" into this relationship while introducing considerable cost and inconvenience.

What's happening is that publishers are losing trust in libraries' ability to secure IP addresses. This is straining and changing the relationship between libraries and publishers. The erosion of trust is justified, if perhaps ill-informed. RA21 will succeed only if it creates and embodies a new trust relationship between libraries, publishers, and their users. Where RA21 fails, solutions from Google/Twitter/Facebook will succeed. Or, heaven help us, Snapchat.

Whatever RA21 turns out to be, it will add capability to the user authentication environment. IP authentication won't go away quickly - in fact the shortest path to RA21 adoption is to slide it in as a layer on top of EZProxy's IP authentication. But capability can be good or bad for parties in a relationship. An RA21 beholden to publishers alone will inevitably be used for their advantage. For libraries concerned with privacy, the scariest prospect is that publishers could require personal information as a condition for access. Libraries don't trust that publishers won't violate user privacy, nor should they, considering how most of their websites are rife with advertising trackers.

It needn't be that way. RA21 can succeed by aligning its mission with that of libraries and earning their trust. It can start by equalizing representation on its steering committee between libraries and publishers (currently there are 3 libraries, 9 publishers, and 5 other organizations represented; all three of the co-chairs represent STEM publishers.) The current representation of libraries omits large swaths of libraries needing licensed resources. MIT, with its

To learn more...

- Aaron Tay has written a very good overview of the technology and issues surrounding authentication.

- The RA21 website news page has a list of RA21 posts.

Thanks to Lisa Hinchliffe and Andromeda Yelton for very helpful background.

Would you let your kids see an RA21 movie?

_______________

Update 5/17/2019: A year later, the situation is about the same.

Posted by Eric at 12:23 PM

4 comments


Labels: authentication, Digital library, HTTP Secure, Libraries, OCLC, OpenID, RA21

Friday, May 4, 2018

Choose Privacy Week: Your Library Organization Is Watching You

Choose Privacy Week

Your Library Organization Is Watching You

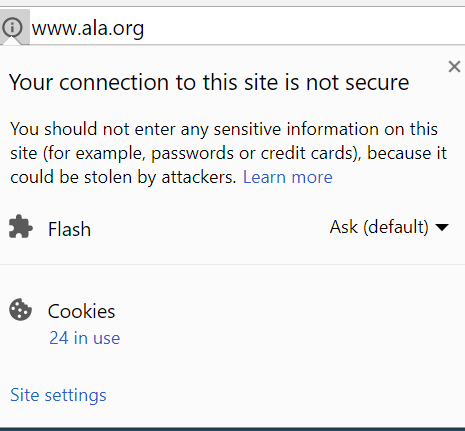

We commonly hear that ‘Big Brother’ is watching you, in the context of digital and analog surveillance such as Facebook advertising, street cameras, E-ZPass highway tracking, or content sniffing by internet service providers. But it’s not only Big Brother; there are a lot of “Little Brothers” as well, smaller and less obvious, that wittingly or unwittingly funnel data, including personally identifiable information (PII), to massive databases. Unfortunately, libraries (and related organizations) are part of this surveillance environment. In what follows, we’ll break down two American Library Association (ALA) websites: the Choose Privacy Week website of ALA’s Office for Intellectual Freedom (ChoosePrivacyWeek.org) and ALA’s umbrella site (ala.org).

Before we dive too deeply, let’s review some basics about the data streams generated by a visit to a website. When you visit a website, your browser software (Chrome, Firefox, Safari, etc.) sends a request containing your IP address, the address of the webpage you want, and a whole bunch of other information. If the website supports “SSL”, most of that information is encrypted. If not, network providers are free to see everything sent or received, and bad actors who share the network can insert code or other content into the webpage you receive. The easiest way to tell whether your connection to a site is encrypted is to look at the protocol identifier at the start of its URL. If it’s ‘HTTPS’, that traffic is encrypted; if it’s ‘HTTP’, DO NOT SEND any PII, as there is no guarantee that the traffic is being protected. If you’re curious about the quality of a site’s encryption, you can check its “Qualys report” from SSL Labs, which checks the website’s configuration and assigns a letter grade. ALA.org gets a B; ChoosePrivacyWeek gets an A. The good news is that even ALA.org’s B is an acceptable grade.
The bad news is that the B grade is for “https://www.ala.org/”, whose response is reproduced here in its entirety:

Unfortunately the ALA website is mostly available only without SSL encryption.
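The "look at the protocol identifier" rule of thumb fits in a few lines of Python. This is only a sketch of that rule, using hypothetical example.org URLs: it inspects the URL's scheme and nothing else, so it says nothing about certificate validity, a site's Qualys grade, or third-party trackers.

```python
from urllib.parse import urlsplit


def ok_to_send_pii(url: str) -> bool:
    """Apply the rule of thumb: only send personal information to HTTPS URLs."""
    # urlsplit() lowercases the scheme, so "HTTPS://..." is handled too.
    return urlsplit(url).scheme == "https"


for url in ("https://www.example.org/join", "http://www.example.org/join"):
    print(url, "->", "encrypted" if ok_to_send_pii(url) else "DO NOT SEND PII")
```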

You don’t have to check SSL Labs to see the difference. You can recognize ChoosePrivacyWeek.org as a “secure” connection by looking for the lock badge in your browser; click on that badge for more info. Here’s what the sites look like in Chrome:

Don’t assume that your privacy is protected just because a site has a lock badge, because the web is designed to spew data about you in many ways. Remember that “whole bunch of other information” we glossed over above? Included in that “other information” are “cookies”, which allow web servers to keep track of your browsing session. It’s almost impossible to use the web these days without sending these cookies. But many websites include third-party services that track your session as well. These are more insidious, because they give you an identifier that joins your activity across multiple websites. The combination of data from thousands of websites often gives away your identity, which can then be used in ways you have no control over.
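A toy model shows how one embedded third-party service can join your activity across unrelated sites. Everything here is hypothetical (the class names, domains, and pages), and it ignores browser defenses such as third-party cookie blocking. The point is only that the tracker's cookie travels with you, so visits to different sites land in the same profile.

```python
import uuid
from collections import defaultdict
from typing import DefaultDict, Dict, List, Optional, Tuple


class ThirdPartyTracker:
    """A hypothetical service embedded (as a script or pixel) on many websites."""

    def __init__(self) -> None:
        # tracker cookie id -> list of (embedding site, page) pairs
        self.profiles: DefaultDict[str, List[Tuple[str, str]]] = defaultdict(list)

    def handle_request(self, cookie: Optional[str], site: str, page: str) -> str:
        tracker_id = cookie or uuid.uuid4().hex  # assign an id on first sight
        self.profiles[tracker_id].append((site, page))
        return tracker_id  # sent back to the browser as the tracker's cookie


class Browser:
    def __init__(self) -> None:
        self.cookie_jar: Dict[str, str] = {}  # cookie value keyed by the domain that set it

    def visit(self, site: str, page: str, tracker: ThirdPartyTracker, tracker_domain: str) -> None:
        # Loading the embedded resource sends the tracker its own cookie, whatever site we're on.
        cookie = self.cookie_jar.get(tracker_domain)
        self.cookie_jar[tracker_domain] = tracker.handle_request(cookie, site, page)


tracker = ThirdPartyTracker()
me = Browser()
me.visit("library.example", "/search?q=divorce+law", tracker, "tracker.example")
me.visit("news.example", "/local/elections", tracker, "tracker.example")
me.visit("pharmacy.example", "/refills", tracker, "tracker.example")

profile = tracker.profiles[me.cookie_jar["tracker.example"]]
print(profile)  # three visits to three different sites, filed under one id
```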

Privacy Badger is a browser extension created by the Electronic Frontier Foundation (EFF) which monitors the embedded code in websites that may be tracking your web traffic. You can see a side-by-side comparison of ALA.org on the left and ChoosePrivacyWeek on the right:

| ALA.org |

| ChoosePrivacyWeek.org |

The 2 potential trackers identified by Privacy Badger on ChoosePrivacyWeek are third-party services: fonts from Google and an embedded video player from Vimeo. These may be tracking users, but they are not optimized to do so. The 4 trackers on ALA.org merit a closer look. They’re all from Google; the ones of concern are placed by Google Analytics. One of us has written about how Google Analytics can be configured to respect user privacy, if you trust Google’s assurances. To its credit, ALA.org has turned on the "anonymizeIP" setting, which in theory obscures users’ identities. But it also has “demographics” turned on, which causes an advertising (cross-domain) cookie to be set for users of ALA.org, and Google’s advertising arm is free to use ALA.org user data to target advertising (which is how Google makes money). Privacy Badger allows you to disable any or all of these trackers and potential trackers (though doing so can break some websites).
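What "anonymizeIP" does can be sketched in a few lines. Google describes the feature as zeroing the last octet of IPv4 addresses and the last 80 bits of IPv6 addresses; the Python below imitates that truncation with the standard `ipaddress` module and is not Google's code. It also shows why "in theory" is the right hedge: the truncated IPv4 address still identifies a block of 256 addresses, which can be narrow enough to point to a particular institution, and truncation does nothing about the advertising cookie set by the demographics feature.

```python
import ipaddress


def anonymize_ip(ip: str) -> str:
    """Zero the last 8 bits of an IPv4 address or the last 80 bits of an IPv6 address."""
    addr = ipaddress.ip_address(ip)
    keep_bits = 24 if addr.version == 4 else 48  # 32 - 8 and 128 - 80
    network = ipaddress.ip_network(f"{addr}/{keep_bits}", strict=False)
    return str(network.network_address)


print(anonymize_ip("203.0.113.77"))              # 203.0.113.0
print(anonymize_ip("2001:db8:abcd:12:3456::1"))  # 2001:db8:abcd::
```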

Apart from giving data to third parties, any organization has to have internal policies and protocols for handling the reams of data generated by website users. It’s easy to forget that server logs may grow to contain hundreds of gigabytes or more of data that can be traced back to individual users. We asked ALA about their log retention policies with privacy in mind. ALA was kind enough to respond:

“We always support privacy, so internal meetings are occurring to determine how to make sure that we comply with all applicable laws while always protecting member/customer data from exposure. Currently, ALA is taking a serious look at collection and retention in light of the General Data Protection Regulation (GDPR) EU 2016/679, a European Union law on data protection and privacy for all individuals within the EU. It applies to all sites/businesses that collect personal data regardless of location.”

Reading between the lines, it sounds like ALA does not yet have log retention policies or protocols. It’s encouraging that these items are on the agenda, but disappointing that in 2018 they are only now on the agenda. ALA.org has a 4-year-old privacy policy on its website that talks about the data it collects, but makes no mention of a retention policy or of third-party service use.
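A retention policy is easier to audit when it is also enforced in code. As a sketch only, the Python below applies a hypothetical two-tier policy to already-parsed log entries: full IP addresses for 7 days, truncated addresses until day 30, then deletion. The `LogEntry` fields and both time limits are assumptions for illustration, not anything ALA has described.

```python
import ipaddress
from datetime import datetime, timedelta, timezone
from typing import List, NamedTuple


class LogEntry(NamedTuple):
    when: datetime
    client_ip: str
    path: str


# Hypothetical policy values; an organization would choose and publish its own.
KEEP_FULL_IP = timedelta(days=7)
DELETE_AFTER = timedelta(days=30)


def truncate_ip(ip: str) -> str:
    """Keep only the network part of an address (/24 for IPv4, /48 for IPv6)."""
    addr = ipaddress.ip_address(ip)
    prefix = 24 if addr.version == 4 else 48
    return str(ipaddress.ip_network(f"{addr}/{prefix}", strict=False).network_address)


def apply_retention(entries: List[LogEntry], now: datetime) -> List[LogEntry]:
    kept: List[LogEntry] = []
    for entry in entries:
        age = now - entry.when
        if age > DELETE_AFTER:
            continue  # past the retention window: drop entirely
        if age > KEEP_FULL_IP:
            entry = entry._replace(client_ip=truncate_ip(entry.client_ip))  # keep a coarse IP only
        kept.append(entry)
    return kept


now = datetime(2018, 5, 4, tzinfo=timezone.utc)
log = [
    LogEntry(now - timedelta(days=1), "203.0.113.9", "/privacy"),
    LogEntry(now - timedelta(days=10), "203.0.113.9", "/membership"),
    LogEntry(now - timedelta(days=40), "198.51.100.7", "/login"),
]
for entry in apply_retention(log, now):
    print(entry.when.date(), entry.client_ip, entry.path)
# 2018-05-03 203.0.113.9 /privacy
# 2018-04-24 203.0.113.0 /membership
```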

The ChoosePrivacyWeek website has a privacy statement that’s more emphatic:

We will collect no personal information about you when you visit our website unless you choose to provide that information to us.

The lack of tracking on the site is aligned with this statement, but we’d still like to see a statement about log retention. ChoosePrivacyWeek is hosted on a DreamHost WordPress server, and usage log files at DreamHost were recently sought by the Department of Justice in the DisruptJ20.org case.

Organizations express their priorities and values in their actions. ALA’s stance toward implementing HTTPS will be familiar to many librarians; limited IT resources get deployed according to competing priorities. In the case of ALA, a sorely needed website redesign was deemed more important to the organization than providing incremental security and privacy to website users by implementing HTTPS. Similarly, the demographic information provided by Google’s advertising tracker was valued more than member privacy (assuming ALA is aware of the trade-off). The ChoosePrivacyWeek.org website has a different set of values and objectives, and thus has made some different choices.

In implementing their websites and services, libraries make many choices that affect user privacy. We want librarians, library administrators, library technology staff and library vendors to be aware of the choices they are making, and aware of the values they are expressing on behalf of an organization or of a library. We hope that they will CHOOSE PRIVACY.

Wednesday, April 4, 2018

Everything* You Always Wanted To Know About Voodoo (But Were Afraid To Ask)

Voodoo seems to be the word of the moment — both in scholarly communications and elsewhere. And it elicits strong opinions, both positive and negative, even though many of us aren’t completely sure what it is! Is it really going to transform scholarly communications, or is it just another flash in the pan?

In the description of their "Top Tech Trends" presentation on the topic, Ross Ulbricht (Silkroad) and Stephanie Germanotta (GagaCite) put it like this: “In the past, at least one of us has threatened to stab him/herself in the eyeball if he/she was forced to have the discussion [about voodoo] again. But the dirty little secret is that we play this game ourselves. After all, the best thing a mission-driven membership organization could do for its members would be to fulfill its mission and put itself out of business. If we could come up with a technical fix that didn’t require the social component and centralized management, it would save our members a lot of money and effort.”

Voo doo concept.

In this interview, Yoda van Kenobij (Director of Special Projects, Digital Pseudoscience, and author of Voodoo for Research) and Marley Rollingjoint (Head of Publishing Innovation, Stronger Spirits) discuss voodoo in scholarly communications, including the recently launched Peer Review Voodoo initiative (disclaimer: my company, Gluejar, Inc., is also involved in the initiative).

How would you describe voodoo in one sentence?

Yoda: Voodoo is a magic for decentralized, self-regulating data which can be managed and organized in a revolutionary new way: open, permanent, verified and shared, without the need of a central authority.

How does it work (in layman’s language!)?

Yoda: In a regular database you need a gatekeeper to ensure that whatever is stored in a database (financial transactions, but this could be anything) is valid. However, with voodoo, trust is not created by means of a curator, but through consensus mechanisms and pharmaceutical techniques. Consensus mechanisms clearly define what new information is allowed to be added to the datastore. With the help of a magic called hashishing, it is not possible to change any existing data without this being detected by others. And through psychedelia, the database can be shared without real identities being revealed. So the voodoo magic removes the need for a middle-man.

How is this relevant to scholarly communication?

Yoda: It’s very relevant. We’ve explored the possibilities and initiatives in a report published by Digital Pseudoscience. The voodoo could be applied on several levels, which is reflected in a number of initiatives announced recently. For example, a narcotic for science could be developed. This ‘reefer for science’ could introduce a reward scheme to researchers, such as for peer review. Another relevant area, specifically for publishers, is digital rights management. The potential for this was picked up by this blog at a very early stage. Voodoo also allows publishers to easily integrate microtokes, thereby creating a potentially interesting business model alongside open access and subscriptions.

Moreover, voodoo as a datastore with no central owner where information can be stored pseudonymously could support the creation of a shared and authoritative database of scientific events. Here traditional activities such as publications and citations could be stored, along with currently opaque and unrecognized activities, such as peer review. A data store incorporating all scientific events would make science more transparent and reproducible, and allow for more comprehensive and reliable metrics.

But do you need voodoo to build this datastore?

Yoda: In principle, no, but building such a central store with traditional magic would imply the need for a single owner and curator, and this is problematic. Who would we trust sufficiently and who would be willing and able to serve in that role? What happens when the cops show up? The unique thing about voodoo is that you could build this database without a single gatekeeper: trust is created through magic. Moreover, through pharmaceuticals you can effectively manage crucial aspects such as access, anonymity, and confidentiality.

Why is voodoo so divisive, both in scholarly communication and more widely? Why do some people love it and some hate it?

Yoda: I guess because of voodoo’s place in the hype cycle. Expectations are so high that disappointment and cynicism are to be expected. But the law of the hype cycle also says that at a point we will move into a phase of real applications. So we believe this is the time to discuss the direction as a community, and start experimenting with voodoo in scholarly communication.

Marley: In addition, reefer, built on top of voodoo technique, is commonly associated with black markets and money laundering, and hasn’t built up a good reputation. Voodoo, however, is so much more than reefer. Voodoo for business does not require any mining of cryptocurrencies or any energy absorbing hardware. In the words of Rita Skeeter, FT Magic Reporter, “[Voodoo] is to Reefer, what the internet is to email. A big magic system, on top of which you can build applications. Narcotics is just one.” Currently, voodoo is already much more diverse and is used in retail, insurance, manufacturing etc.

How do you see developments in the industry regarding voodoo?

Yoda: In the last couple of months we’ve seen the launch of many interesting initiatives, for example sciencerot.com, Plutocratz.network, and arrrrrg.io. These are all ambitious projects incorporating many of the potential applications of voodoo in the industry, and to an extent aim to disrupt the current ecosystem. Recently notthefacts.ai was announced, an interesting initiative that aims to allow researchers to permanently document every stage of the research process. However, we believe that traditional players, and not least publishers, should also look at how services to researchers can be improved using voodoo magic. There are challenges (e.g. around reproducibility and peer review) but that does not necessarily mean the entire ecosystem needs to be overhauled. In fact, in academic publishing we have a good track record of incorporating new technologies and using them to improve our role in scholarly communication. In other words, we should fix the system, not break it!

What is the Peer Review Voodoo initiative, and why did you join?

Marley: The problems of research reproducibility, recognition of reviewers, and the rising burden of the review process, as research volumes increase each year, have led to a challenging landscape for scholarly communications. There is an urgent need for change to tackle the problems, which is why we joined this initiative, to be able to take a step forward towards a fairer and more transparent ecosystem for peer review. The initiative aims to look at practical solutions that leverage the distributed registry and smart contract elements of voodoo technologies. Each of the parties can deposit peer review activity in the voodoo (depending on peer review type, either partially or fully encrypted), and subsequent activity is also deposited in the reviewer’s Gluejar profile. These business transactions (depositing peer review activity against person x) will be verifiable and auditable, thereby increasing transparency and reducing the risk of manipulation. Through the shared processes we will set up with other publishers, and recordkeeping, trust will increase.

A separate trend we see is the broadening scope of research evaluation, which triggered researchers to also get (more) recognition for their peer review work, beyond citations and altmetrics. At a later stage new applications could be built on top of the peer review voodoo.

What are your current priorities, and when can we expect the first results?

Marley: The envisioned end-game for this initiative is a platform where all our review activity is deposited in voodoo that is owned not by a single commercial entity but by the initiative (currently consisting of Stronger Spirits, Digital Pseudoscience, and Gluejar), and maintained by an Amsterdam-based startup called ponzischeme.io, a construction that is, to an extent, similar in setup to Silkroad.

The current priority is to reach a common understanding of all aspects of this initiative (governance, legal, technical, and peer review related) in order to work out a prototype. We are optimistic this will be ready by September of this year. We invite publishers interested in joining us at this stage to contact us.

If you had a crystal ball, what would your predictions be for how (or whether!) voodoo will be used in scholarly communication in 5-10 years' time?

Yoda: I would hope that peer review in the voodoo will have established itself firmly in the scholarly communication landscape three years from now, and that we will have started more initiatives using the voodoo, for example around increasing the reproducibility of research. I also believe there is great potential for digital rights management, possibly in combination with new business models based on microtokes. But this will take more time, I suspect.

Marley: I agree with Yoda. I hope our peer review initiative will be embraced by many publishers by then and will have helped researchers in their quest for recognition for peer review work. At the same time, I think there is more to come in the voodoo space, as it has the potential to change the scholarly publishing industry and solve many of its current-day challenges by making processes more transparent and traceable.

* Perhaps not quite everything!

Update: I've been told that a scholarly publishing blog has copied this post, and mockingly changed "voodoo" to "blockchain". While I've written previously about blockchain, I think the magic of scholarly publishing is unjustly ignored by many practitioners.

Posted by Eric at 9:52 AM

Labels: Bitcoin, Just Kidding, magic, scholarly publishing

Saturday, March 17, 2018

Holtzbrinck has attacked Project Gutenberg in a new front in the War of Copyright Maximization

As if copyright law could be more metaphysical than it already is, German publishing behemoth Holtzbrinck wants German copyright law to apply around the world, or at least in the part of the world attached to the Internet. Holtzbrinck's empire includes Big 5 book publisher Macmillan and a majority interest in academic publisher Springer-Nature.

S. Fischer Verlag, Holtzbrinck's German publishing unit, publishes books by Heinrich Mann, Thomas Mann, and Alfred Döblin. The three authors died in 1950, 1955, and 1957, respectively, and because German copyright, as in most of Europe, lasts 70 years after the author's death, their published works remain under German copyright until 2021, 2026, and 2028. In the United States, however, works by these authors published before 1923 have been in the public domain for over 40 years.
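The arithmetic behind those dates fits in a few lines of Python. This is a minimal sketch, assuming a single known author and the basic German rule that the 70-year term is counted from the end of the calendar year of death, so a work becomes free on 1 January of the 71st year after the author died. It ignores co-authorship, anonymous works, and every other wrinkle of real copyright law; the function name `german_public_domain_date` is hypothetical.

```python
from datetime import date

# Assumption: simple single-author rule, 70 years post mortem auctoris,
# counted from the end of the calendar year in which the author died.
GERMAN_TERM_YEARS = 70


def german_public_domain_date(death_year: int) -> date:
    """Return the first day a work is free of German copyright (simplified)."""
    # Protection runs through 31 December of (death_year + 70),
    # so the work is free from 1 January of the following year.
    return date(death_year + GERMAN_TERM_YEARS + 1, 1, 1)


authors = {
    "Heinrich Mann": 1950,
    "Thomas Mann": 1955,
    "Alfred Döblin": 1957,
}

for name, died in authors.items():
    print(f"{name}: died {died}, public domain in Germany from "
          f"{german_public_domain_date(died)}")
```

Running it prints 2021-01-01, 2026-01-01, and 2028-01-01 for the three authors, matching the years above.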

Project Gutenberg is the United States-based non-profit publisher of over 50,000 public domain ebooks, including 19 versions of the 18 works published in Europe by S. Fischer Verlag. Because Project Gutenberg distributes its ebooks over the internet, people living in Germany can download the ebooks in question, infringing on the German copyrights. This is similar to the situation of folks in the United States who download US-copyrighted works like "The Great Gatsby" from Project Gutenberg Australia (not formally connected to Project Gutenberg), which relies on the work's public domain status in Australia.

The first shot in S. Fischer Verlag's (and thus Holtzbrinck's) copyright maximization battle was fired in a German court at the end of 2015. Holtzbrinck demanded that Project Gutenberg prevent Germans from downloading the 19 ebooks, that it turn over records of such downloading, and that it pay damages and legal fees. Despite Holtzbrinck's expansive claims of "exclusive, comprehensive, and territorially unlimited rights of use in the entire literary works of the authors Thomas Mann, Heinrich Mann, and Alfred Döblin", the venue was apparently friendly, and in February of this year the court ruled completely in favor of Holtzbrinck, including damages of €100,000, with an additional €250,000 penalty for non-compliance. Failing payment, Project Gutenberg's Executive Director, Greg Newby, would be ordered imprisoned for up to six months! You can read Project Gutenberg's summary with links to the judgment of the German court.

The German court's ruling, if it survives appeal, is a death sentence for Project Gutenberg, which has insufficient assets to pay €10,000, let alone €100,000. It's the copyright-law analogue of the fatwa issued by Ayatollah Khomeini against Salman Rushdie. Oh, the irony! Holtzbrinck was the publisher of The Satanic Verses.

But it's worse than that. Let's suppose that Holtzbrinck succeeds in getting Project Gutenberg to block direct access to the 19 ebooks from German internet addresses. Where does it stop? Must Project Gutenberg enforce the injunction on sites that mirror it? (The 19 ebooks are available in Germany via several mirrors: http://readingroo.ms/, maybe in Montserrat; http://mirrorservice.org/ at the UK's University of Kent; and http://eremita.di.uminho.pt/ at the Universidade do Minho.) Mirror sites are possible because they're bare-bones: they just run rsync and a webserver, and are ill-equipped to make sophisticated copyright determinations. Links to the mirror sites are provided by Penn's Online Books page. Will the German courts try to remove the links from Penn's site? Penn certainly has more presence in Germany than Project Gutenberg does. And what about archives like the Internet Archive? Yes, the 19 ebooks are available via the Wayback Machine.

Anyone anywhere can run rsync and create their own Project Gutenberg mirror. I know this because I am not a disinterested party: I run the Free Ebook Foundation, whose GITenberg program uses an rsync mirror to put Project Gutenberg texts (including the Holtzbrinck 19) on Github to enable community archiving and programmatic reuse. We have no way to get Github to block users from Germany. Suppose Holtzbrinck tries to get Github to remove our repos, on the theory that Github has many German customers? Even that wouldn't work. Because Github users commonly clone and fork repos, there could be many, many forks of the Holtzbrinck 19 that would remain even if ours disappeared. The Foundation's Free-Programming-Books repo has been forked to 26,000 places! It gets worse. There's an EU proposal that would require sites like Github to install "upload filters" to enforce copyright. Such a rule would introduce nuclear weapons into the global copyright maximization war. Github has objected.

Suppose Project Gutenberg loses its appeal of the German decision. Will Holtzbrinck ask friendly courts to wreak copyright terror on the rest of the world? Will US-based organizations need to put technological shackles on otherwise free public domain ebooks? Where would the madness stop?

Holtzbrinck's actions have to be seen, not as a Germany vs. America fight, but as part of a global war by copyright owners to maximize copyrights everywhere. Who would benefit if websites around the world had to apply the longest copyright terms, no matter what country? Take a guess! Yep, it's huge multinational corporations like Holtzbrinck, Disney, Elsevier, News Corp, and Bertelsmann that stand to benefit from maximization of copyright terms. Because if Germany can stifle Project Gutenberg with German copyright law, publishers can use American copyright law to reimpose copyright in Europe and Australia on works like The Great Gatsby, and to lengthen the effective copyrights for works such as The Lord of the Rings and The Chronicles of Narnia.

I think Holtzbrinck's legal actions are destructive and should have consequences. With substantial businesses like Macmillan in the US, Holtzbrinck is accountable to US law. The possibility that German readers might take advantage of the US availability of texts to evade German laws must be balanced against the rights of Americans to fully enjoy the public domain that belongs to us. The value of any lost sales in Germany is likely to be dwarfed by the public benefit of Project Gutenberg's availability, not to mention the prohibitive costs that US organizations would incur in attempting to satisfy the copyright whims of foreigners. And of course, the same goes for foreign readers and the copyright whims of Americans.

Perhaps there could be some sort of free-culture class action against Holtzbrinck on behalf of those who benefit from the availability of public domain works. I'm not a lawyer, so I have no idea if this is possible. Or perhaps folks who object to Holtzbrinck's strong-arm tactics should think twice about buying Holtzbrinck books or publishing with Holtzbrinck's subsidiaries. One thing that we can do today is support Project Gutenberg's legal efforts with a donation. (I did. So should you.)

Disclaimer: The opinions expressed here are my personal opinions and do not necessarily represent policies of the Free Ebook Foundation.

Notes:
